How MDPs are redefining condition-based maintenance

GA, UNITED STATES, November 12, 2025 /EINPresswire.com/ -- Condition-based maintenance (CBM) focuses on scheduling interventions according to the real-time health state of a system, offering economic advantages over traditional time-based approaches. However, optimizing CBM is challenging when dealing with complex degradation patterns, uncertain environments, and interacting components. This research examines how Markov decision processes (MDPs) and their variants are increasingly applied to support effective sequential maintenance decisions. By analyzing modeling frameworks, optimization criteria, multi-component interactions, and emerging reinforcement learning strategies, the study outlines a structured pathway for designing dynamic, cost-efficient maintenance policies. The findings highlight the importance of balancing system reliability, operational continuity, and computational feasibility in modern CBM optimization.

Industrial systems today rely heavily on advanced sensing and monitoring technologies to detect degradation and prevent catastrophic failures. Traditional maintenance relies on scheduled replacements, which can either waste resources or fail to prevent unexpected breakdowns. Condition-based maintenance (CBM) triggers maintenance only when needed, but real systems often have uncertain failure behaviors, coupled dependencies, and multiple performance constraints that complicate decision-making. Furthermore, analytical models may struggle to represent high-dimensional equipment data or dynamic operating conditions. These challenges call for more adaptive and structured decision frameworks, and for deeper research into maintenance strategies that capture uncertainty, dynamics, and cost trade-offs.

This study was conducted by researchers from Tianjin University, the ZJU-UIUC Institute at Zhejiang University, and the National University of Singapore, and was published (DOI: 10.1007/s42524-024-4130-7) in Frontiers of Engineering Management in 2025. The review analyzes how Markov decision processes and their extensions are applied to optimize condition-based maintenance across single and multi-component systems. It highlights advances in maintenance scheduling under uncertainty, integration with production and inventory decisions, and the growing role of reinforcement learning in adaptive maintenance planning.

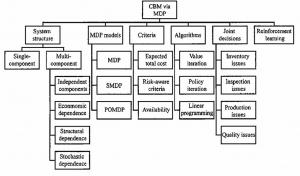

The review identifies MDPs as a powerful framework for modeling maintenance as a sequential decision-making problem, where the system state evolves stochastically and actions determine long-term outcomes. Standard MDP-based CBM models typically minimize lifetime maintenance costs, but variants such as risk-aware models also consider safety and reliability targets. To address real-world uncertainty, POMDPs handle cases where system states are only partially observable, while semi-Markov decision processes allow for irregular inspection and repair intervals. For multi-component systems, the review describes how dependencies—such as shared loads, cascading failures, and economic coupling—significantly complicate optimization and often require higher-dimensional decision models. To manage computational complexity, researchers have applied approximate dynamic programming, linear programming relaxations, hierarchical decomposition, and policy iteration with state aggregation. Finally, reinforcement learning methods are emerging to learn optimal maintenance strategies directly from data without requiring full system knowledge, though challenges remain in data availability, stability, and convergence speed. The review emphasizes that combining modeling, optimization, and learning offers strong potential for scalable CBM.
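To make the sequential decision framing concrete, the following is a minimal sketch of value iteration on a toy single-component CBM problem. It is not taken from the review: the four degradation states, the transition probabilities, the cost figures, the discount factor, and the names `P`, `C`, and `value_iteration` are all illustrative assumptions.

```python
import numpy as np

# Hypothetical degradation states: 0 = new, 1 = worn, 2 = badly worn, 3 = failed.
# Every number below is an illustrative assumption, not a value from the review.
N_STATES = 4
ACTIONS = ("wait", "replace")

# P[a, s, s2] = probability of moving from state s to state s2 under action a.
P = np.zeros((len(ACTIONS), N_STATES, N_STATES))
P[0] = [
    [0.80, 0.15, 0.05, 0.00],
    [0.00, 0.70, 0.20, 0.10],
    [0.00, 0.00, 0.60, 0.40],
    [0.00, 0.00, 0.00, 1.00],  # a failed unit stays failed until replaced
]
P[1][:, 0] = 1.0  # replacement always returns the unit to the "new" state

# C[a, s] = immediate cost of taking action a in state s.
C = np.array([
    [0.0, 0.0, 0.0, 100.0],    # wait: downtime cost accrues while failed
    [20.0, 20.0, 20.0, 60.0],  # replace: preventive is cheaper than corrective
])


def value_iteration(P, C, gamma=0.95, tol=1e-8, max_iter=10_000):
    """Return the minimum expected discounted cost per state and a greedy policy."""
    V = np.zeros(P.shape[1])
    for _ in range(max_iter):
        # Bellman backup: Q[a, s] = C[a, s] + gamma * sum_s2 P[a, s, s2] * V[s2]
        Q = C + gamma * (P @ V)
        V_new = Q.min(axis=0)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = C + gamma * (P @ V)
    return V, Q.argmin(axis=0)


V, policy = value_iteration(P, C)
for s in range(N_STATES):
    print(f"state {s}: cost-to-go {V[s]:.2f}, action {ACTIONS[policy[s]]}")
```

Under these assumed numbers the resulting policy waits while the unit is new and replaces it once it has failed; where the switch happens in between depends on the chosen costs and transition probabilities. The same backup is what becomes expensive in multi-component systems, since the state space grows with every added component.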

The authors emphasize that MDP-based CBM aligns well with real operational needs because it supports dynamic, state-based decision-making under uncertainty. They note that as systems become more complex and sensor data more abundant, the ability to integrate multi-source information into maintenance planning will be increasingly critical. They also highlight reinforcement learning as a promising direction, particularly for environments where system parameters cannot be fully defined in advance. However, they caution that practical implementation requires attention to computational efficiency, data quality, and interpretability to ensure reliable field deployment.

This research provides guidance for industries where reliability is essential, including manufacturing, transportation, power infrastructure, aerospace, and offshore energy. More adaptive maintenance strategies derived from MDPs and reinforcement learning can reduce unnecessary downtime, lower operational costs, and prevent safety-critical failures. The review suggests that future industrial maintenance platforms will integrate real-time equipment diagnostics with automated decision engines capable of continuously updating optimal policies. Such systems could support predictive planning across entire production networks, enabling safer, more economical, and more resilient industrial operations.

DOI

10.1007/s42524-024-4130-7

Original Source URL

https://doi.org/10.1007/s42524-024-4130-7

Funding information

This research was supported by the National Natural Science Foundation of China (Grant Nos. 72401253, 72371182, 72002149, and 72271154), the National Social Science Fund of China (Grant No. 23CGL018), the State Key Laboratory of Biobased Transportation Fuel Technology, China (Grant No. 512302-X02301), and a start-up grant from the ZJU-UIUC Institute at Zhejiang University (Grant No. 130200-171207711).

Lucy Wang

BioDesign Research
